5 June 2025, by Attila Papp

Data Quality Testing on Modern Data Platforms

Data volumes, velocities, and varieties keep growing, but decision-makers no longer tolerate a "close enough" approach to data quality. If a machine-learning model forecasts demand in the wrong currency, or KPIs and dashboards double-count revenue because of duplicate primary keys, trust erodes. Modern data architectures, such as the domain-oriented data mesh, decentralize data ownership, so responsibility for quality is expected to decentralize right alongside it. That means every dataset should ship with (i) a machine-readable contract describing what "good" means, (ii) an automated test suite that proves it, and (iii) a catalog or governance layer that surfaces the resulting score so consumers can decide whether to rely on it.
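To make the three parts concrete, here is a minimal sketch in plain Python. The contract layout, the dataset name `sales.orders`, the column names, and the scoring formula (share of passed rules) are all assumptions made for illustration; real contracts and scores depend on the rule engine and catalog in use.

```python
import json

# Hypothetical contract: dataset, columns, and threshold are invented for illustration.
CONTRACT_JSON = """
{
  "dataset": "sales.orders",
  "columns": {"order_id": "int", "currency": "str", "amount": "float"},
  "allowed_values": {"currency": ["EUR", "USD"]},
  "min_score": 95.0
}
"""

TYPES = {"int": int, "str": str, "float": float}


def run_contract(contract: dict, rows: list) -> dict:
    """Run one check per contract clause and return a catalog-ready summary."""
    results = {}
    # (ii) schema test: every row carries every declared column with the declared type
    for col, type_name in contract["columns"].items():
        results[f"type:{col}"] = all(
            isinstance(row.get(col), TYPES[type_name]) for row in rows
        )
    # (ii) domain test: values stay inside the declared set
    for col, allowed in contract.get("allowed_values", {}).items():
        results[f"allowed:{col}"] = all(row.get(col) in allowed for row in rows)
    # (iii) score: share of passed rules in percent (an assumed formula)
    score = 100.0 * sum(results.values()) / len(results)
    return {
        "dataset": contract["dataset"],
        "score": score,
        "trusted": score >= contract["min_score"],
        "results": results,
    }


contract = json.loads(CONTRACT_JSON)  # (i) the machine-readable contract
rows = [
    {"order_id": 1, "currency": "EUR", "amount": 10.0},
    {"order_id": 2, "currency": "GBP", "amount": 5.5},  # currency outside the contract
]
summary = run_contract(contract, rows)
print(json.dumps(summary, indent=2))
```

Here three of four rules pass, so the score is 75 and the dataset is flagged as not trusted. A consumer would read that flag from the catalog rather than re-running the checks.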

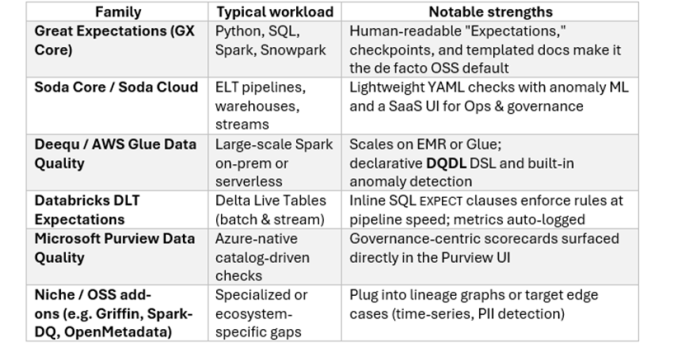

Common Data-Quality Frameworks (2025 Snapshot)

Pragmatic pattern: pick one rule engine (GX, Soda, Deequ) for authoring & CI, plus one observability plane (Purview, DataZone, Unity Catalog, DataHub) for cataloging the results.

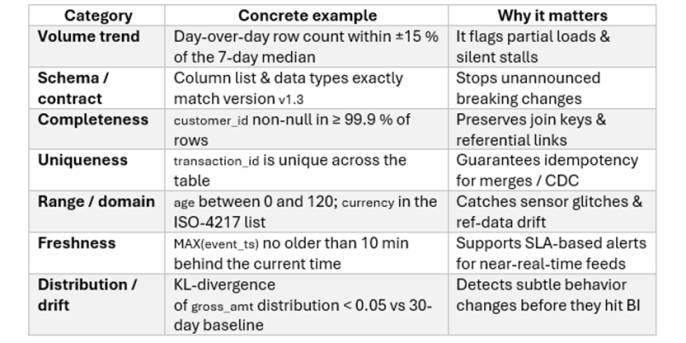

Rules That Catch 80 % of Real-World Incidents

Many organizations have platform teams that provide blueprints, tools, and utilities to developers. In my experience, a small set of rules catches most data quality issues: in line with the Pareto principle, roughly 80% of issues can be covered by the following tests with about 20% of the effort.

I therefore recommend running inexpensive structural checks on every micro-batch and reserving more comprehensive statistical drift analyses for less frequent schedules.
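As an illustration of what "inexpensive structural checks" can look like, the sketch below implements three of them in plain Python: not-null, unique key, and freshness. The choice of these three checks, the column names, and the one-hour freshness window are assumptions for the example, not a fixed list.

```python
from collections import Counter
from datetime import datetime, timedelta, timezone


def check_not_null(rows, column):
    """Pass when no row has a missing value in `column`."""
    return all(row.get(column) is not None for row in rows)


def check_unique(rows, key):
    """Pass when `key` has no duplicates; also return the offending values."""
    counts = Counter(row.get(key) for row in rows)
    duplicates = sorted(k for k, n in counts.items() if n > 1)
    return not duplicates, duplicates


def check_freshness(rows, ts_column, max_age, now):
    """Pass when the newest record is no older than `max_age`."""
    newest = max(row[ts_column] for row in rows)
    return now - newest <= max_age


# Hypothetical micro-batch with one null amount and one duplicate key.
now = datetime(2025, 6, 5, 12, 0, tzinfo=timezone.utc)
batch = [
    {"order_id": 1, "amount": 10.0, "loaded_at": now - timedelta(minutes=5)},
    {"order_id": 2, "amount": None, "loaded_at": now - timedelta(minutes=4)},
    {"order_id": 2, "amount": 7.5, "loaded_at": now - timedelta(minutes=3)},
]

report = {
    "amount_not_null": check_not_null(batch, "amount"),
    "order_id_unique": check_unique(batch, "order_id")[0],
    "fresh_within_1h": check_freshness(batch, "loaded_at", timedelta(hours=1), now),
}
print(report)
```

Each check is a single pass over the batch, which is what makes it affordable on every micro-batch; drift statistics that need history or full scans would run on a separate, slower schedule.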

Implementing Quality on the Big Three Clouds & in a Data Mesh

Azure Data Lakehouse (Synapse + Fabric)

- Execution points – Synapse pipelines and Fabric dataflows include Assert steps; Spark notebooks run GX/Soda; Microsoft Purview ingests results into catalog scorecards (source: Microsoft Learn).

- Reference workflow

  1. The YAML rule file resides beside the pipeline JSON in Git.

  2. Azure Pipelines job spins up a small Spark cluster and runs GX tests on a PR sample.

  3. Production pipeline re-executes rules; failing partitions are tagged "quarantine" in ADLS.

  4. Purview displays a "Quality Score" badge; Purview policies can block export if the score < 95 %.
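The quarantine and threshold steps can be sketched without any Azure dependency. The snippet below is plain Python, not a Purview or ADLS API: the partition key `load_date`, the rule, and the definition of the score as the share of passing partitions are assumptions for illustration.

```python
from collections import defaultdict


def tag_partitions(rows, partition_key, rule):
    """Return {partition: "ok" | "quarantine"} based on a row-level rule."""
    failed = defaultdict(bool)
    for row in rows:
        # A partition is quarantined as soon as one of its rows violates the rule.
        failed[row[partition_key]] |= not rule(row)
    return {p: "quarantine" if bad else "ok" for p, bad in failed.items()}


def quality_score(tags):
    """Share of partitions that passed, in percent (an assumed definition)."""
    ok = sum(1 for tag in tags.values() if tag == "ok")
    return 100.0 * ok / len(tags)


# Hypothetical rows; the second partition contains a negative amount.
rows = [
    {"load_date": "2025-06-01", "amount": 12.0},
    {"load_date": "2025-06-02", "amount": -3.0},
    {"load_date": "2025-06-03", "amount": 8.0},
    {"load_date": "2025-06-03", "amount": 1.0},
]
tags = tag_partitions(rows, "load_date", lambda r: r["amount"] >= 0)
score = quality_score(tags)
export_allowed = score >= 95.0  # threshold taken from the workflow above
print(tags, round(score, 1), export_allowed)
```

In a real pipeline the tag would be written as metadata on the storage path and the score pushed to the catalog; the decision logic stays this small.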

AWS Data Lake (S3 + Glue)

- Execution points – AWS Glue Data Quality or PyDeequ on EMR; rules authored in DQDL (source: docs.aws.amazon.com).

- Reference workflow

  1. DQDL stored next to CDK/Terraform IaC.

  2. Glue job "stage-0" validates raw files; failures send EventBridge alerts and write delete files in Iceberg to mask bad rows.

  3. Metrics stream to CloudWatch, then to QuickSight and AWS DataZone lineage graphs.

  4. Lake Formation tag-based policies can deny reads on any partition with status = FAIL.
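Because DQDL rulesets are plain text, they can be generated from a small helper and committed next to the IaC code. The sketch below renders a ruleset string in Python; the helper names and the table columns are hypothetical, and the rule types used (`RowCount`, `IsComplete`, `IsUnique`, `ColumnValues`) reflect my understanding of DQDL and should be checked against the AWS documentation before use.

```python
def dqdl_ruleset(rules):
    """Render a list of DQDL rule strings as a `Rules = [...]` block."""
    body = ",\n".join(f"    {rule}" for rule in rules)
    return f"Rules = [\n{body}\n]"


def rules_for_table(primary_key, non_negative=()):
    """Baseline rules for one table: non-empty, complete and unique key."""
    rules = [
        "RowCount > 0",
        f'IsComplete "{primary_key}"',
        f'IsUnique "{primary_key}"',
    ]
    # Optional value-range rules for numeric columns.
    rules += [f'ColumnValues "{col}" >= 0' for col in non_negative]
    return rules


# Hypothetical table with key `order_id` and numeric column `amount`.
ruleset = dqdl_ruleset(rules_for_table("order_id", non_negative=["amount"]))
print(ruleset)
```

Generating the ruleset from one function keeps the baseline rules identical across tables, while the resulting text file remains reviewable in a pull request.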

Databricks Lakehouse (Any Cloud)

- Execution points – Delta Live Tables (DLT) Expectations for streaming/batch pipelines; Delta constraints for hard enforcement (source: docs.databricks.com).

- Reference workflow

  1. Developer writes an expectation such as CONSTRAINT valid_id EXPECT (id IS NOT NULL) ON VIOLATION FAIL UPDATE directly in SQL (valid_id is an illustrative constraint name).

  2. Expectation metrics auto-land in the Unity Catalog, surfaced in the table history.

  3. For ad-hoc notebooks, GX or Soda libraries run against Spark tables and push results to MLflow or Unity metrics.

  4. Access policies can check Unity quality tags before granting BI connections.
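The behaviour of an expectation can be emulated in plain Python to show what the violation policy changes. This is not the Databricks API: `apply_expectation` and its three modes ("warn" keeps bad rows, "drop" removes them, "fail" aborts) are a simplified stand-in based on my understanding of how DLT expectations behave.

```python
class ExpectationFailed(Exception):
    """Raised when a fail-style expectation is violated."""


def apply_expectation(rows, name, predicate, on_violation="warn"):
    """Apply one expectation and return (kept_rows, metrics).

    on_violation: "warn" keeps bad rows, "drop" removes them, "fail" aborts.
    """
    bad = [row for row in rows if not predicate(row)]
    metrics = {"expectation": name, "passed": len(rows) - len(bad), "failed": len(bad)}
    if bad and on_violation == "fail":
        raise ExpectationFailed(f"{name}: {len(bad)} violating row(s)")
    if on_violation == "drop":
        rows = [row for row in rows if predicate(row)]
    return rows, metrics


def not_null(row):
    return row["id"] is not None


rows = [{"id": 1}, {"id": None}, {"id": 3}]

# "drop" mode: the bad row is removed and counted in the metrics.
kept, metrics = apply_expectation(rows, "valid_id", not_null, on_violation="drop")
print(kept, metrics)

# "fail" mode: the same violation stops the run.
try:
    apply_expectation(rows, "valid_id", not_null, on_violation="fail")
    aborted = False
except ExpectationFailed:
    aborted = True
print("pipeline aborted:", aborted)
```

The metrics dictionary is the part a catalog would consume; the violation mode only decides whether the pipeline continues.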

Federated Data Mesh

A data mesh spreads data products across domains; quality, therefore, follows a "federated templates, local thresholds" model:

1. Rule library – The central platform team curates YAML/Spark/dbt macros (expect_unique_key, expect_freshness) and publishes them as a lightweight package.

2. Domain CI – Each repo inherits a GitHub Actions template that runs the library on sample data.

3. Contract registry – Catalog (DataHub, Purview, DataZone) serves as the source of truth for schemas, rules, and SLOs; versions drive impact analysis and pull requests in ThoughtSpot.

4. Governance view – The mesh-wide dashboard displays pass-rate trends per domain; federated governance intervenes only when SLA badges trend red.
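The "federated templates, local thresholds" idea can be sketched as a merge of central defaults with domain overrides. The rule names come from the list above; the parameter names (`max_duplicate_ratio`, `max_age_hours`), the default values, and the payments domain are assumptions for illustration.

```python
# Central library: rule templates with default thresholds.
# Parameter names and defaults are hypothetical.
CENTRAL_TEMPLATES = {
    "expect_unique_key": {"max_duplicate_ratio": 0.0},
    "expect_freshness": {"max_age_hours": 24},
}


def resolve_rules(domain_overrides):
    """Merge domain overrides into the central templates.

    Domains may tune thresholds, but cannot introduce rules or parameters
    that the central library does not define.
    """
    unknown_rules = set(domain_overrides) - set(CENTRAL_TEMPLATES)
    if unknown_rules:
        raise KeyError(f"unknown rule(s) {sorted(unknown_rules)}")
    resolved = {}
    for rule, defaults in CENTRAL_TEMPLATES.items():
        overrides = domain_overrides.get(rule, {})
        unknown = set(overrides) - set(defaults)
        if unknown:
            raise KeyError(f"{rule}: unknown parameter(s) {sorted(unknown)}")
        resolved[rule] = {**defaults, **overrides}
    return resolved


# Hypothetical domain: a near-real-time feed tightens freshness to one hour.
payments_rules = resolve_rules({"expect_freshness": {"max_age_hours": 1}})
print(payments_rules)
```

Rejecting unknown rules and parameters is one possible design choice: it keeps the rule vocabulary central while leaving the numbers to each domain.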

Key success factor: automated PR workflows that notify downstream owners when a contract change could break them.
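A minimal sketch of such an impact check, assuming contracts expose a `{column: type}` schema and the catalog's lineage can be reduced to a map from downstream owner to the columns they read. The function names, schemas, and consumer names are all hypothetical.

```python
def breaking_changes(old_columns, new_columns):
    """Compare two {column: type} schemas and return changes that can break readers."""
    changes = {}
    for col, old_type in old_columns.items():
        if col not in new_columns:
            changes[col] = "removed"
        elif new_columns[col] != old_type:
            changes[col] = f"type {old_type} -> {new_columns[col]}"
    return changes  # added columns are treated as non-breaking in this sketch


def owners_to_notify(changes, consumers):
    """Map each affected downstream owner to the changed columns they read."""
    notify = {}
    for owner, used_columns in consumers.items():
        hit = sorted(set(used_columns) & set(changes))
        if hit:
            notify[owner] = hit
    return notify


old = {"order_id": "int", "amount": "float", "currency": "str"}
new = {"order_id": "int", "amount": "str", "region": "str"}  # amount retyped, currency dropped
consumers = {  # hypothetical lineage: owner -> columns they read
    "finance-dashboard": ["order_id", "amount"],
    "fx-model": ["currency"],
    "ops-report": ["order_id"],
}
changes = breaking_changes(old, new)
print(changes)
print(owners_to_notify(changes, consumers))
```

A CI job could run this comparison on every contract change and open a notification or pull request per affected owner; consumers that do not read the changed columns are left alone.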

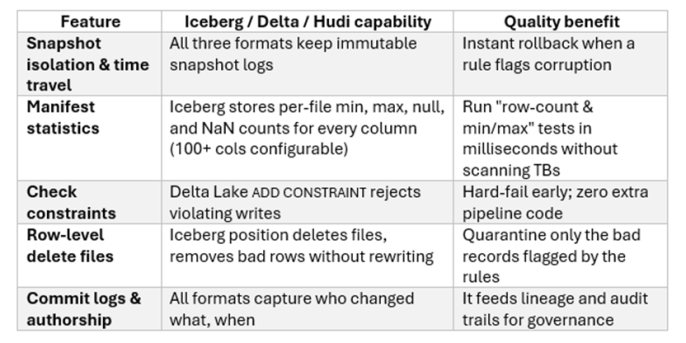

Open Table Formats

Open table formats such as Iceberg and Delta are increasingly popular, so I investigated how to integrate them into the data quality testing process.

It is recommended to execute metadata-only checks first (row counts, schema drift) using Iceberg/Delta "metadata tables" and then escalate to full-scan frameworks only if those "cheap" tests fail.
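The two-tier approach can be sketched as follows. The snapshot dictionaries stand in for values that would be read from table metadata (for example an Iceberg snapshots table or the Delta table history); their shape, the 50 % row-count threshold, and the `full_scan` callback are assumptions for illustration.

```python
def metadata_checks(snapshot, previous):
    """Cheap checks that read only table metadata, never the data files."""
    issues = []
    if snapshot["row_count"] == 0:
        issues.append("empty snapshot")
    elif snapshot["row_count"] < 0.5 * previous["row_count"]:
        issues.append("row count dropped by more than 50%")  # arbitrary threshold
    if snapshot["schema"] != previous["schema"]:
        issues.append("schema drift")
    return issues


def validate(snapshot, previous, full_scan):
    """Run metadata checks first; call the expensive `full_scan` only on failure."""
    issues = metadata_checks(snapshot, previous)
    if not issues:
        return {"status": "pass", "full_scan_ran": False, "issues": []}
    return {"status": "investigate", "full_scan_ran": True, "issues": issues + full_scan()}


def full_scan():
    # Stand-in for a GX/Soda/Deequ run over the data; returns its findings.
    return ["full-scan findings would be listed here"]


previous = {"row_count": 1000, "schema": {"order_id": "long", "amount": "double"}}
healthy = {"row_count": 1020, "schema": {"order_id": "long", "amount": "double"}}
suspect = {"row_count": 400, "schema": {"order_id": "long", "amount": "string"}}

print(validate(healthy, previous, full_scan))
print(validate(suspect, previous, full_scan))
```

The healthy snapshot never triggers a scan, so the common case costs only a metadata read; the suspect snapshot fails both cheap checks and is escalated.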

From Quality Signals to Governance Controls

Modern governance asks two questions: "Can I trust this data?" and "Am I allowed to use it?" Quality testing provides the measurable answer to the first and often conditions the second:

1. Event pipeline – Every rule result emits a JSON event (table, rule, status, score).

2. Catalog ingestion – Collibra, Purview, Unity Catalog, DataZone, or OpenMetadata converts those events into quality facets stored alongside schema and lineage.

3. Policy evaluation – OPA, Lake Formation, or Synapse RBAC evaluate access: ALLOW read IF quality_score >= 95 AND classification IN ('public', 'internal').

4. Audit & lineage – When a rule fails, lineage instantly exposes every downstream dashboard; DataOps can mute alerts or roll back snapshots.

Thus, quality rules are not paperwork; they become active guardrails that automate compliance.
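The event and policy steps above can be sketched in a few lines of Python. The event fields (table, rule, status, score) and the policy expression come from the list; the `emitted_at` field, the function names, and the sample values are assumptions, and a real deployment would express the policy in the engine's own language rather than in Python.

```python
import json
from datetime import datetime, timezone


def rule_event(table, rule, status, score):
    """Build the JSON event for one rule result."""
    return json.dumps({
        "table": table,
        "rule": rule,
        "status": status,
        "score": score,
        "emitted_at": datetime.now(timezone.utc).isoformat(),  # extra field, an assumption
    })


def allow_read(quality_score, classification):
    """ALLOW read IF quality_score >= 95 AND classification IN ('public', 'internal')."""
    return quality_score >= 95 and classification in ("public", "internal")


# Hypothetical rule result travelling from the pipeline to the policy engine.
event = json.loads(rule_event("sales.orders", "expect_unique_key", "PASS", 97.5))
print(allow_read(event["score"], "internal"))      # True
print(allow_read(event["score"], "confidential"))  # False
print(allow_read(90.0, "public"))                  # False
```

Keeping the event flat and small makes it easy for any catalog to ingest, and the policy only needs two attributes from it to make a decision.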

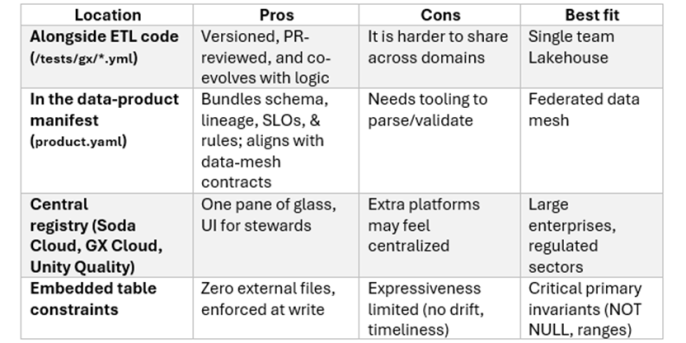

Where Should We Store the Rules (Tests)?

A Reference End-to-End Lifecycle

1. Author contract (schema + rules + SLOs) in YAML in the domain repo.

2. CI gate runs GX/Soda/Deequ on PR sample; the merge is blocked if any rule fails.

3. The ingestion pipeline (ADF, Glue, DLT, etc.) re-executes rules on full data; bad rows are handled via Iceberg delete files or Delta constraints.

4. The metrics emitter pushes events to Kafka/EventBridge; retries and auto-quarantine reduce false positives.

5. Catalog ingestion builds quality scorecards; lineage ties failures to consumers.

6. The policy engine enforces "no read on score < 95 %" or when PII checks fail.

7. Alert routing pages on-call only after X consecutive failures; otherwise, it opens a Jira ticket for steward triage.

8. The quarterly governance review evaluates MTTR, trend lines, and rule coverage and adds new templates to the federated library.
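Step 7 is the easiest part of the lifecycle to get wrong in practice, so here is a minimal sketch of the routing decision. The function name, the return labels, and the default of three consecutive failures for "X" are assumptions for illustration.

```python
def route_alert(history, page_after=3):
    """Decide what to do after the latest rule run.

    history: run outcomes, oldest first; True = pass, False = fail.
    page_after: the "X" from step 7; 3 is an arbitrary illustrative default.
    """
    if not history or history[-1]:
        return "none"  # latest run passed, nothing to route
    # Count the unbroken run of failures ending at the latest run.
    streak = 0
    for passed in reversed(history):
        if passed:
            break
        streak += 1
    return "page_on_call" if streak >= page_after else "open_ticket"


print(route_alert([True, True, False]))          # open_ticket
print(route_alert([True, False, False, False]))  # page_on_call
print(route_alert([False, False, True]))         # none
```

A single failure opens a ticket for the data steward, and only a sustained streak wakes someone up, which keeps transient issues from paging on-call.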

Key Take-Aways

- Shift quality left: fail code reviews and pipelines, not BI dashboards.

- Treat rules as code: semantic versioning, Git diff, CI.

- Exploit manifest metadata: inexpensive Iceberg/Delta statistics catch many issues quickly.

- Expose trust: catalog badges turn invisible plumbing into visible product value.

- Federate thresholds, centralize visibility: domains own their numbers; governance owns the watchtower.

- Automate impact analysis: contract changes open downstream PRs automatically.

Conclusion

"Modern" data platforms are only modern if they make high-quality data the default. DevOps principles such as shift-left apply just as well to modern data platforms, and particularly to data quality testing.

Whether the workload runs on Azure Synapse, an S3 lake with Glue, a Databricks Lakehouse, or a fully federated data mesh, I would recommend the following based on my investigation:

1. Declarative, version-controlled rules that travel with each dataset.

2. Platform-native execution points (Assert, Glue DQ, DLT Expectations) backed by flexible engines (GX, Soda, Deequ).

3. Open table formats that add statistics, constraints, and time-travel rollback for free.

4. Governance catalogs that turn quality metrics into enforceable policy.

Adopt these four pillars, and you win the only metric that counts: trustworthy data delivered consistently at scale.