8. December 2023 By Kristóf Nyári

Making system design Q-uick with Amazon Q

The 2023 AWS re:Invent gave developers, analysts, and business owners many innovations to consider. One of the new services is Amazon Q, a generative-AI-based chat assistant. Today, I will show how it can make system design in the AWS Cloud quicker. To find out more about all the innovations announced at re:Invent, visit the official Announcements page.

What is Amazon Q?

Amazon Q is AWS’s own take on the recent surge of generative-AI assistants. It helps you build applications on AWS, research best practices, resolve errors, and get assistance with coding new features for your applications. Just like other AI assistants, such as ChatGPT or Bing AI, Amazon Q works on a question-and-answer basis: just type your problem and it tries to give a solution.

Using Amazon Q

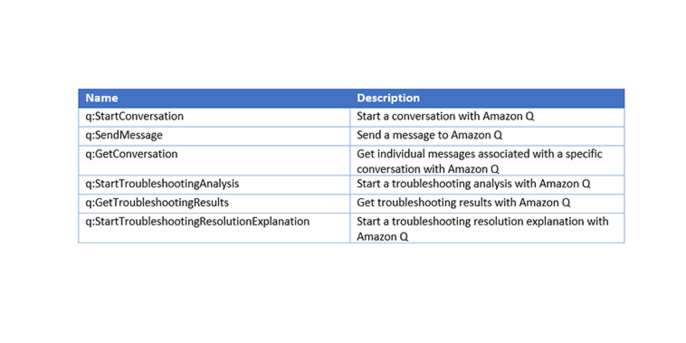

First things first: just as with any AWS service, the correct IAM permissions must be granted before you can chit-chat with Q. The permissions can be found in the table below:

These permissions give fine-grained access to the chatbot service, but as always, a simple * wildcard gives full access. Here is a sample IAM policy document that grants full access to Q:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AllowAmazonQFullAccess",
      "Effect": "Allow",
      "Action": ["q:*"],
      "Resource": "*"
    }
  ]
}
```
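If you manage IAM policies from code, the sample document can also be generated programmatically. Below is a minimal Python sketch using only the standard library; the function name `build_q_policy` is hypothetical, and it defaults to the `q:*` wildcard from the sample policy. For fine-grained access, you would pass the specific action names from the permissions table instead.

```python
import json


def build_q_policy(actions=None, sid="AllowAmazonQFullAccess"):
    """Build an IAM policy document that allows the given Amazon Q actions.

    Hypothetical helper: defaults to the q:* wildcard (full access).
    Pass specific action names from the permissions table for fine-grained access.
    """
    if actions is None:
        actions = ["q:*"]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": sid,
                "Effect": "Allow",
                "Action": list(actions),
                "Resource": "*",
            }
        ],
    }


if __name__ == "__main__":
    # Print the policy as JSON, ready to paste into the IAM console or a template.
    print(json.dumps(build_q_policy(), indent=2))
```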

Speeding up the system design process

Of the many use cases for a generative-AI assistant, we will look specifically at system design. I will ask Amazon Q to suggest infrastructure designs for different modern-day services.

1. Microservices that connect to a Kafka cluster

In the first test case, I asked Amazon Q to suggest an infrastructure to house a few microservices that connect to a Kafka cluster, handling around 1M requests per day:

Q: I would like to deploy a micro-service based application inside AWS that connects to a Kafka cluster. There would be around 1M requests per day. Which services to use?

A: To deploy a micro-service based application inside AWS that connects to a Kafka cluster with around 1M requests per day, I would recommend the following architecture:

1. Use Amazon Managed Streaming for Apache Kafka (Amazon MSK) to host the Kafka cluster. MSK is a fully managed service that makes it easy to set up and operate Apache Kafka clusters in the cloud.

2. Host the microservices on Amazon Elastic Container Service (ECS) or Amazon Elastic Kubernetes Service (EKS). ECS and EKS provide scalable container orchestration capabilities.

3. Use Amazon Virtual Private Cloud (VPC) to provide network isolation and connectivity between the microservices and MSK cluster.

4. Load balance traffic to the microservices using an Application Load Balancer.

5. Monitor application and infrastructure metrics using Amazon CloudWatch.

6. Autoscale the microservices as needed using AWS Auto Scaling to handle the 1M daily requests.

This architecture leverages fully managed AWS services like MSK, ECS/EKS and load balancers to deploy and operate the microservices at scale reliably and securely in AWS.

As the demo shows, Amazon Q sketched a rough outline of the microservice architecture on AWS. The chatbot also linked the relevant official AWS documentation for each suggested service.
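To put "1M requests per day" into perspective before sizing the ECS/EKS services and autoscaling, a quick back-of-the-envelope calculation helps. The Python sketch below converts daily volume to requests per second; the peak factor of 10 is purely an assumption, since real traffic is rarely spread evenly across the day.

```python
# Back-of-the-envelope sizing for "around 1M requests per day".
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds


def average_rps(requests_per_day: int) -> float:
    """Average requests per second if traffic were perfectly uniform."""
    return requests_per_day / SECONDS_PER_DAY


def peak_rps(requests_per_day: int, peak_factor: float) -> float:
    """Rough peak estimate: the average rate multiplied by an assumed peak factor."""
    return average_rps(requests_per_day) * peak_factor


if __name__ == "__main__":
    daily = 1_000_000
    PEAK_FACTOR = 10  # hypothetical: the busiest period carries 10x the average rate
    print(f"average: {average_rps(daily):.1f} req/s")  # average: 11.6 req/s
    print(f"peak (x{PEAK_FACTOR}): {peak_rps(daily, PEAK_FACTOR):.1f} req/s")  # peak (x10): 115.7 req/s
```

At roughly 11.6 requests per second on average, the sustained load is modest, which suggests that the autoscaling in step 6 matters mostly for absorbing peaks.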

2. Edge APIs with High Availability

Next, I wanted to see how Q would plan the infrastructure for multiple APIs that serve users all around the globe. In particular, I wanted to check whether it would suggest edge functions (Lambda@Edge), Multi-AZ solutions, and caching.

Q: Could you suggest an infrastructure that could accommodate several APIs that serve users all around the globe with minimal downtime.

A: Here is an infrastructure that could accommodate several APIs that serve users all around the globe with minimal downtime:

Deploy API endpoints in multiple AWS Regions using Amazon API Gateway. This allows your APIs to be closer to users in different parts of the world for lower latency.

Use AWS Lambda along with API Gateway to run application code and logic separately from the API infrastructure. Lambda functions can be deployed in each Region.

Store and synchronize user and application data across Regions using a database like Amazon Aurora with global database enabled. This keeps data consistent everywhere.

Use Amazon Route 53 for DNS resolution and routing users to the Region with the lowest latency. You can also use Lambda@Edge to query Route 53 for optimal Region routing.

Configure API Gateway endpoints behind Amazon CloudFront for caching and improved performance. CloudFront will route requests to the nearest API Gateway endpoint.

Monitor APIs for errors, latency and usage using API Gateway metrics and CloudWatch. This helps identify issues and optimize performance.

Scale infrastructure automatically using auto scaling groups for EC2 instances or provisioned concurrency for Lambda functions to handle varying load.

As the answer shows, the chatbot suggested an infrastructure that satisfies all the requirements I wanted to include: Lambda@Edge, CloudFront caching, and a deployment spread across multiple Regions. The multi-Region solution with API Gateway endpoints and a shared Aurora global database is quite sophisticated.
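To illustrate the routing idea in the answer (send each user to the lowest-latency Region and skip Regions that are down), here is a conceptual Python sketch. It is not the Route 53 API: in practice, Route 53 latency-based records combined with health checks make this decision at the DNS level. The function name `pick_region`, the Region list, and the latency figures are all made up for illustration.

```python
from typing import Dict, Optional, Set


def pick_region(latencies_ms: Dict[str, float], unhealthy: Set[str]) -> Optional[str]:
    """Return the healthy Region with the lowest measured latency, or None if none are healthy."""
    # Drop Regions that are failing health checks, so users are never routed to them.
    candidates = {region: ms for region, ms in latencies_ms.items() if region not in unhealthy}
    if not candidates:
        return None
    # Choose the Region with the smallest latency value.
    return min(candidates, key=candidates.get)


if __name__ == "__main__":
    # Hypothetical latencies from one client in Europe to three API Gateway Regions.
    latencies = {"eu-central-1": 18.0, "us-east-1": 95.0, "ap-southeast-1": 170.0}
    print(pick_region(latencies, unhealthy=set()))             # eu-central-1
    print(pick_region(latencies, unhealthy={"eu-central-1"}))  # us-east-1
```

The second call shows why the health check matters for "minimal downtime": when the closest Region fails, traffic falls back to the next-fastest healthy Region instead of failing outright.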

3. Dropbox-like highly available file-sharing app

Lastly, I wanted to check how Amazon Q would handle a request for an online file-sharing service that would retain files for a year with high availability.

Q: Design an infrastructure that could house an online, containerized, highly available file-sharing app with multi-AZ backups and persistence for at least a year. Also, encrypted sharing of files would be required. (Such as Dropbox)

A: Here is an infrastructure design for the file sharing app requirements:

Use Amazon S3 for primary file storage which provides durable object storage across multiple Availability Zones for redundancy and persistence for over a year with lifecycle policies.

Deploy the application servers using Amazon ECS on EC2 instances behind an Application Load Balancer for scalability. Use containerized application architecture.

Use Amazon EFS for shared file storage between containers. EFS is elastic and can scale throughput and capacity independently as needed.

Encrypt files at rest using S3 default encryption and EFS encryption.

Use AWS Identity and Access Management (IAM) to control access to S3 and EFS resources through fine-grained permissions.

Take EFS file system snapshots daily and store in S3 for versioning and point-in-time recovery up to a year.

Deploy containers across multiple Availability Zones for high availability. Use Elastic Load Balancing and Auto Scaling Groups to scale the application.

Monitor infrastructure using services like CloudWatch and integrate with notification services.
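The lifecycle-policy point in Q's answer can be made more concrete. The sketch below (my own illustration, not part of Q's output) builds a lifecycle configuration in the shape boto3's `put_bucket_lifecycle_configuration` accepts as its `LifecycleConfiguration` argument. It assumes bucket versioning is enabled; the 30-day transition and 365-day retention are example values, and the helper name `one_year_lifecycle` is hypothetical. Lifecycle rules only transition or expire data; they do not block deletions, so strict retention would call for S3 Object Lock instead.

```python
import json


def one_year_lifecycle(rule_id: str = "retain-one-year") -> dict:
    """S3 lifecycle configuration (illustrative values, assumes versioning is enabled):
    - move current objects to Standard-IA after 30 days to lower storage cost;
    - keep noncurrent (overwritten or deleted) versions for 365 days, then expire them.
    """
    return {
        "Rules": [
            {
                "ID": rule_id,
                "Filter": {"Prefix": ""},  # empty prefix: the rule applies to every object
                "Status": "Enabled",
                "Transitions": [{"Days": 30, "StorageClass": "STANDARD_IA"}],
                "NoncurrentVersionExpiration": {"NoncurrentDays": 365},
            }
        ]
    }


if __name__ == "__main__":
    print(json.dumps(one_year_lifecycle(), indent=2))
```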

The Dropbox-like solution is also quite sophisticated. However, one major change I would make is encrypting the S3 objects and the EFS file system with customer managed AWS KMS keys (SSE-KMS, server-side encryption with AWS Key Management Service, for S3) instead of the default encryption settings. With your own keys, key policies control who can use them and every key usage is logged in AWS CloudTrail, which adds security to a file-sharing app that could host sensitive information or PII.
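The S3 half of that change can be expressed as a bucket default-encryption configuration. The sketch below builds the structure that boto3's `put_bucket_encryption` expects as its `ServerSideEncryptionConfiguration` argument; the helper name `sse_kms_config` and the key ARN are placeholders. The EFS side is not covered here, since an EFS file system's KMS key is chosen when the file system is created.

```python
import json


def sse_kms_config(kms_key_arn: str) -> dict:
    """Default-encryption configuration that makes S3 encrypt new objects with SSE-KMS
    using the given KMS key instead of the SSE-S3 default."""
    return {
        "Rules": [
            {
                "ApplyServerSideEncryptionByDefault": {
                    "SSEAlgorithm": "aws:kms",
                    "KMSMasterKeyID": kms_key_arn,
                },
                # S3 Bucket Keys reduce the number of requests S3 makes to KMS.
                "BucketKeyEnabled": True,
            }
        ]
    }


if __name__ == "__main__":
    # Hypothetical key ARN: replace it with your own customer managed key.
    key_arn = "arn:aws:kms:eu-central-1:111122223333:key/EXAMPLE-KEY-ID"
    print(json.dumps(sse_kms_config(key_arn), indent=2))
```

With boto3 installed and credentials configured, the configuration could be applied with `boto3.client("s3").put_bucket_encryption(Bucket="my-bucket", ServerSideEncryptionConfiguration=sse_kms_config(key_arn))`, where `my-bucket` is a hypothetical bucket name.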

Closing thoughts

After trying my hand at different infrastructure designs, I have yet to find any major misguidance or bad practice in AWS’s new chatbot. Although it is limited to the AWS Cloud, this service allows AWS Cloud engineers to speed up their system design process and build more robust, highly available and secure services in the public cloud.

I would suggest trying Amazon Q out, since it can not only suggest infrastructure designs but also troubleshoot issues and analyse service usage within AWS. As of now, programmatic access is only available through REST API calls, which lets developers automate Q functionality within their programs.

As a final thought, this service alone does not replace strong knowledge of system design, nor will it replace us in the long term, but it definitely helps speed up certain processes.